Reposted by Gary Marcus

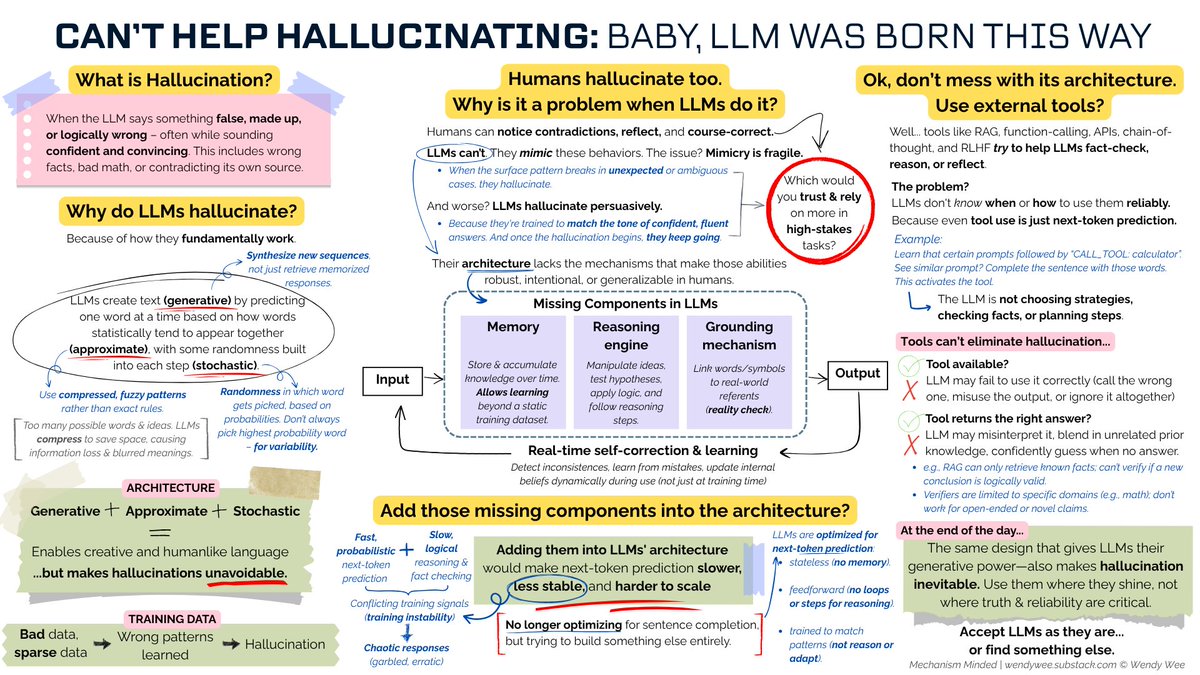

The statement and accompanying conversation discuss why hallucinations are inherent to Large Language Models (LLMs) and what that implies. They highlight the architectural reasons for hallucinations, compare them to human cognition, and emphasize how difficult such behavior is to eliminate. The conversation aims to inform and to correct misconceptions about LLMs, contributing to public understanding of AI technology.

- The statement aims to inform and clarify the nature of LLM hallucinations, striving to do no harm by providing accurate information. [+1] Principle 1: I will strive to do no harm with my words and actions.

- The conversation promotes understanding by explaining complex AI concepts in an accessible manner, fostering empathy towards the limitations of LLMs. [+2] Principle 3: I will use my words and actions to promote understanding, empathy, and compassion.

- The discussion engages in constructive dialogue by addressing potential misconceptions and providing a balanced view of LLM capabilities and limitations. [+1] Principle 4: I will engage in constructive criticism and dialogue with those in disagreement and will not engage in personal attacks or ad hominem arguments.